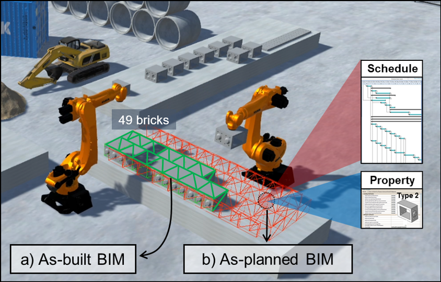

Digital Twin for Robotic Construction

This study aims to develop and validate a digital twin-based deep reinforcement learning (DRL) framework for autonomous task allocation by robots in dynamic construction environments. A digital twin environment that precisely reflects on-site changes in real time lets robots learn adaptive policies that automatically adjust task priorities and execution strategies. The study further aims to validate the technology's applicability in real-world construction scenarios and to establish a data-synchronized learning system that significantly improves overall project performance, for example by reducing construction time compared with existing rule-based methods.

[Research Objectives]

To move beyond performing tasks based solely on predefined rules and to investigate the feasibility of an adaptive policy in which robots can autonomously adjust task priorities and execution strategies in response to changing site conditions.

To examine whether an agent can learn effectively in a digital twin environment that realistically represents construction sites and reflects near–real-time changes in site conditions.

To demonstrate the practical applicability of robotic systems in real construction tasks by validating the transferability of the learned policy to actual construction scenarios.

[Research Content]

This research aims to develop and validate a digital twin–driven deep reinforcement learning (DRL) framework that enables adaptive task allocation for construction robots operating in dynamic and stochastic construction environments, and to demonstrate that such a data-synchronized learning approach can significantly improve project-level performance (e.g., construction time) compared to conventional rule-based methods.
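
To make the allocation idea concrete, here is a minimal sketch under loudly stated assumptions; it is not the project's actual implementation. It assumes a single robot, four tasks with hypothetical durations, and a deterministic toy simulator (the hypothetical `DigitalTwinSim`) standing in for the digital twin. Tabular Q-learning is swapped in for DRL so the example stays self-contained. The objective (sum of task completion times) and the fixed-order rule-based baseline (`rule_based_plan`) are also illustrative assumptions. `sync` overwrites the simulator's durations to mimic near-real-time site updates, after which the agent is retrained in the twin.

```python
import random
from typing import Dict, FrozenSet, List, Tuple

State = FrozenSet[int]  # tasks not yet executed
QTable = Dict[Tuple[State, int], float]


class DigitalTwinSim:
    """Hypothetical toy twin: a deterministic single-robot task simulator whose
    task durations are overwritten by the latest (assumed) site observations."""

    def __init__(self, durations: List[float]) -> None:
        self.durations = list(durations)

    def sync(self, observed_durations: List[float]) -> None:
        # Stand-in for near-real-time synchronization with the construction site.
        self.durations = list(observed_durations)

    def step(self, remaining: State, task: int) -> Tuple[State, float]:
        # Every waiting task (this one included) is delayed by its duration,
        # so an episode's return equals -(sum of task completion times).
        reward = -self.durations[task] * len(remaining)
        return remaining - {task}, reward


def greedy(q: QTable, s: State) -> int:
    # Unvisited pairs default to 0.0, which is optimistic because rewards are negative.
    return max(sorted(s), key=lambda t: q.get((s, t), 0.0))


def train_q(sim: DigitalTwinSim, episodes: int = 3000, alpha: float = 1.0,
            eps: float = 0.2, seed: int = 0) -> QTable:
    """Epsilon-greedy tabular Q-learning inside the twin (a stand-in for DRL)."""
    rng = random.Random(seed)
    q: QTable = {}
    start: State = frozenset(range(len(sim.durations)))
    for _ in range(episodes):
        s = start
        while s:
            a = rng.choice(sorted(s)) if rng.random() < eps else greedy(q, s)
            s_next, r = sim.step(s, a)
            # Undiscounted finite-horizon target; the terminal (empty) state has value 0.
            best_next = max((q.get((s_next, t), 0.0) for t in s_next), default=0.0)
            old = q.get((s, a), 0.0)
            q[(s, a)] = old + alpha * (r + best_next - old)
            s = s_next
    return q


def greedy_order(q: QTable, n_tasks: int) -> List[int]:
    # Roll out the learned policy from the full task set.
    s: State = frozenset(range(n_tasks))
    order: List[int] = []
    while s:
        a = greedy(q, s)
        order.append(a)
        s = s - {a}
    return order


def total_completion_time(durations: List[float], order: List[int]) -> float:
    t = total = 0.0
    for task in order:
        t += durations[task]
        total += t
    return total


if __name__ == "__main__":
    rule_based_plan = [0, 1, 2, 3]  # hypothetical predefined order
    twin = DigitalTwinSim([5.0, 2.0, 8.0, 3.0])  # hypothetical durations
    learned = greedy_order(train_q(twin), 4)
    print(learned, total_completion_time(twin.durations, learned))  # [1, 3, 0, 2] 35.0
    print(total_completion_time(twin.durations, rule_based_plan))  # 45.0

    twin.sync([5.0, 2.0, 1.0, 3.0])  # hypothetical site update: task 2 got much shorter
    learned = greedy_order(train_q(twin), 4)
    print(learned, total_completion_time(twin.durations, learned))  # [2, 1, 3, 0] 21.0
    print(total_completion_time(twin.durations, rule_based_plan))  # 31.0
```

Because this toy twin is deterministic, a learning rate of 1.0 is safe. With noisy site data, a smaller and possibly decaying rate would be the usual choice. In this setting the learned order recovers shortest-task-first, which is known to minimize the sum of completion times on a single machine. The fixed plan order, by contrast, stays the same when site conditions change.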

[Supporting Institutions]

University of Michigan, Ripple

[Research Period]

May 2019 - July 2021